Dashboard Update: From Agent Framework to One Prompt, Saving $1,700/Month

February 21, 2026

This is Part 5 in a series about an LED diabetes dashboard I built for my daughter Abigail. It shows her glucose, insulin, and weather on a 64x64 display, with an AI agent that offers short supportive observations. Part 1 covers building it. Part 2 adds insulin tracking. Part 3 introduces the AI insights agent. Part 4 refines it after a stressful night.

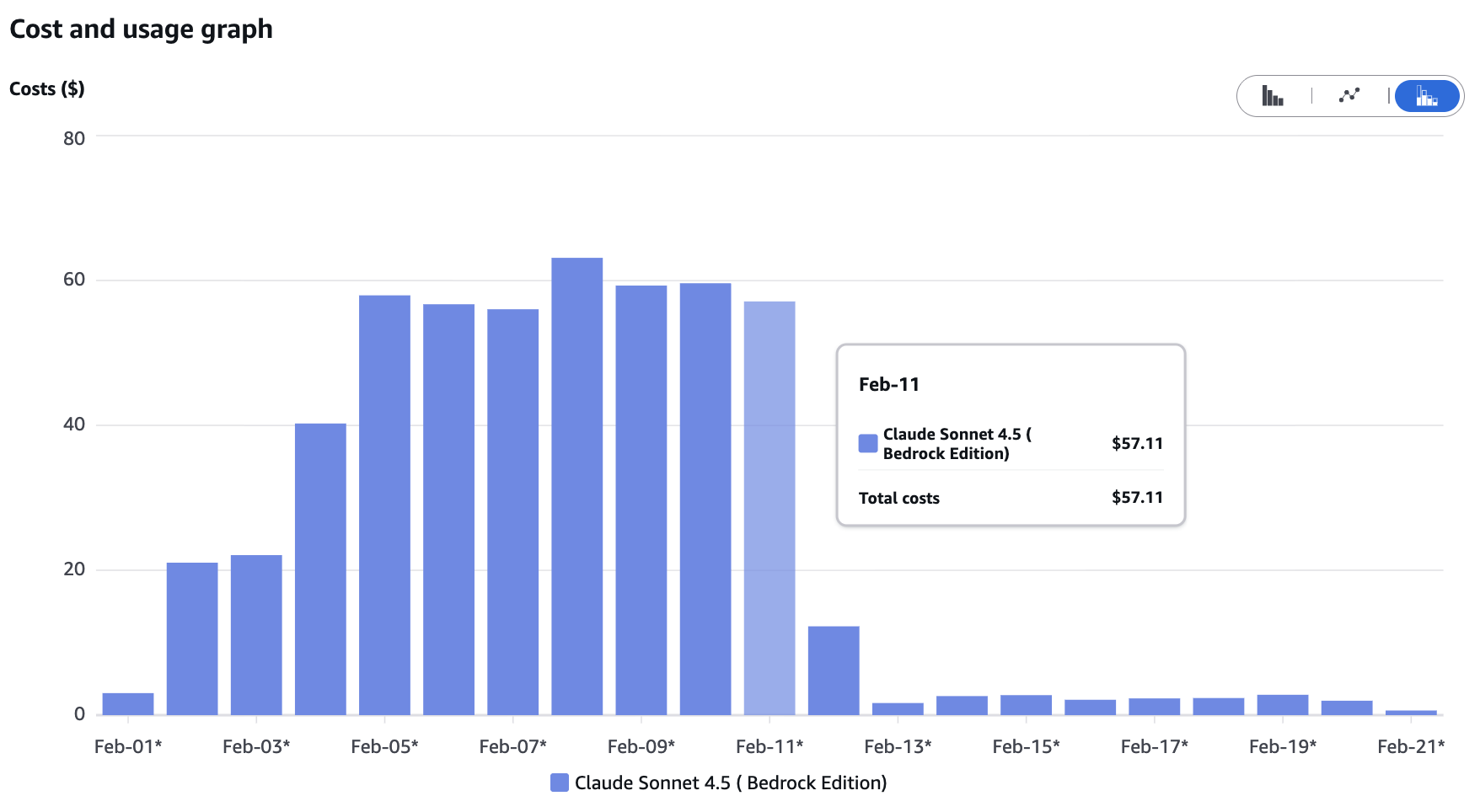

I used an agent framework where a single prompt would have done the job. The overkill was on track to cost me $1,700/month.

I built the insights agent using AWS Bedrock Agents. Bedrock Agents is a framework for building autonomous AI workflows where the model decides which tools to call, in what order, based on a conversation. It’s great for complex, fluid tasks where the reasoning path isn’t known in advance.

My task was not complex or fluid. I needed to take diabetes data, inject it into a prompt, and get a 30-character insight for an LED display. The same data, the same prompt structure, every time. A one-shot inference call.

Instead, I built a multi-turn agent with four action groups, OpenAPI schemas, IAM roles for each tool, and agent alias versioning. The agent would decide to call getRecentGlucose, then getDailyStats, then getRecentTreatments, then getInsightHistory, then storeInsight. Each roundtrip resent the full prompt and accumulated context. Five calls, each one bigger than the last.

One Prompt Instead of Five

The fix was simple. Instead of letting the agent orchestrate its own data gathering, each Lambda pre-fetches the data it needs from DynamoDB, formats everything into a single prompt, and calls Claude once via InvokeModel. One request, one response.
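Here is a minimal sketch of that one-shot shape. It is not the actual invoke-model.ts: the data shapes, the prompt wording, and the `InvokeFn` stand-in are all assumptions for illustration. The request body follows the Anthropic Messages format that Bedrock accepts for Claude models, and the network call is injected so the sketch runs without AWS credentials.

```typescript
// Hypothetical data shapes; the real DynamoDB records may look different.
interface GlucoseReading {
  timestamp: string; // ISO 8601
  mgdl: number;
}

interface InsightContext {
  readings: GlucoseReading[];
  recentInsights: string[];
}

// Stand-in for the Bedrock call (assumption). In practice this would wrap
// InvokeModelCommand from @aws-sdk/client-bedrock-runtime and return the
// decoded response body as a string.
type InvokeFn = (requestBody: string) => Promise<string>;

// Format everything the model needs into a single prompt string.
function buildPrompt(ctx: InsightContext): string {
  const glucose = ctx.readings
    .map((r) => `${r.timestamp} ${r.mgdl} mg/dL`)
    .join("\n");
  const previous = ctx.recentInsights.map((s) => `- ${s}`).join("\n");
  return [
    "Recent glucose readings:",
    glucose,
    "Previous insights (do not repeat):",
    previous,
    "Write one supportive observation of at most 30 characters.",
  ].join("\n");
}

// Build the request body in the Anthropic Messages format used on Bedrock.
function buildRequestBody(prompt: string): string {
  return JSON.stringify({
    anthropic_version: "bedrock-2023-05-31",
    max_tokens: 50,
    messages: [{ role: "user", content: prompt }],
  });
}

// One request, one response: no tool calls, no accumulated context.
async function generateInsight(
  ctx: InsightContext,
  invoke: InvokeFn
): Promise<string> {
  const raw = await invoke(buildRequestBody(buildPrompt(ctx)));
  const parsed = JSON.parse(raw) as { content: { type: string; text: string }[] };
  const first = parsed.content[0];
  return first ? first.text.trim() : "";
}
```

Because the data is fetched before the call, the prompt is sent exactly once, instead of being resent with growing context on every tool roundtrip.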

I (with Claude Code) replaced the entire Bedrock Agent framework with a shared invoke-model.ts utility of about 80 lines. Deleting 2,559 lines of code is always satisfying.

Bedrock Agents are super cool. I’ll definitely build more agents on this service where I have more ambiguous tasks. But for a predictable prompt/response cycle where I know exactly what data the model needs, one-shot inference worked way better. Faster, cheaper, and about 80 lines of code instead of 2,559.

Post-deploy results after a full day of production data: zero errors, 32 insights generated, quality unchanged. Cost: roughly $1/day, down from $58/day.

Rate Limiting

The architecture wasn’t the only inefficiency. The agent ran on every CGM reading: 288 per day, one every 5 minutes. Most invocations replaced a still-relevant insight minutes later.

I replaced the 5-minute debounce with smart triggers that only generate a new insight when something actually changes: enough time has passed, glucose moves significantly, or glucose crosses a zone boundary. This cut invocations from 288/day to about 36.
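A rough sketch of what those three triggers could look like as a single check. The thresholds, zone boundaries, and names below are illustrative assumptions, not the values the dashboard actually uses.

```typescript
// All thresholds are illustrative assumptions.
const MIN_INTERVAL_MS = 60 * 60 * 1000; // "enough time has passed"
const SIGNIFICANT_DELTA_MGDL = 30; // "glucose moves significantly"

type Zone = "low" | "inRange" | "high";

// Hypothetical zone boundaries in mg/dL.
function zoneOf(mgdl: number): Zone {
  if (mgdl < 70) return "low";
  if (mgdl > 180) return "high";
  return "inRange";
}

interface LastInsight {
  generatedAt: number; // epoch milliseconds
  mgdl: number; // glucose value when the insight was generated
}

// Returns true only when one of the three triggers fires.
function shouldGenerateInsight(
  last: LastInsight | null,
  nowMs: number,
  currentMgdl: number
): boolean {
  if (last === null) return true; // nothing on the display yet
  if (nowMs - last.generatedAt >= MIN_INTERVAL_MS) return true;
  if (Math.abs(currentMgdl - last.mgdl) >= SIGNIFICANT_DELTA_MGDL) return true;
  return zoneOf(currentMgdl) !== zoneOf(last.mgdl);
}
```

The check runs on every CGM reading, but it is cheap: the model is only invoked when it returns true.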

I also tried switching from Claude Sonnet 4.5 to Haiku 4.5, figuring short LED insights didn’t need the bigger model. That didn’t go well, at all. Haiku hallucinated constantly. “Steady drop since 8pm” when the data showed 25 minutes of decline. “Great overnight!” when she’d been high for hours. It would confidently describe glucose patterns that weren’t in the data at all. For anything involving medical data, even short observations, grounding matters more than speed. I switched back to Sonnet the same night.

The nice thing: with rate limiting cutting volume by 87%, Sonnet at 36 invocations/day cost less than Haiku at 288/day. I didn’t have to compromise on quality to fix the cost problem.

| Configuration | Invocations/day | Cost/day | Cost/month |
|---|---|---|---|
| Bedrock Agent + 5min debounce | ~288 | ~$58 | ~$1,751 |
| Bedrock Agent + rate limiting | ~36 | ~$7 | ~$210 |
| One-shot inference + rate limiting | ~36 | ~$1 | ~$28 |
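As a back-of-the-envelope check, the two savings can be separated using the rounded figures from the table. This is only arithmetic on those approximations, so treat the per-invocation numbers as rough.

```typescript
interface ConfigRow {
  name: string;
  invocationsPerDay: number;
  costPerDay: number; // USD, rounded as in the table
}

// Rounded figures copied from the table.
const configs: ConfigRow[] = [
  { name: "Bedrock Agent + 5min debounce", invocationsPerDay: 288, costPerDay: 58 },
  { name: "Bedrock Agent + rate limiting", invocationsPerDay: 36, costPerDay: 7 },
  { name: "One-shot inference + rate limiting", invocationsPerDay: 36, costPerDay: 1 },
];

// Average cost of a single insight under a given configuration.
function costPerInvocation(row: ConfigRow): number {
  return row.costPerDay / row.invocationsPerDay;
}

// Fraction removed when going from `before` to `after`.
function fractionSaved(before: number, after: number): number {
  return (before - after) / before;
}

// Rate limiting: 288 -> 36 invocations is a 0.875 (87.5%) cut in volume.
const volumeCut = fractionSaved(288, 36);

// Per-invocation cost: about $0.20 for either agent row, about $0.03 one-shot.
const perInvocation = configs.map(costPerInvocation);
```

Read this way, rate limiting cut how often the model runs, and one-shot inference cut what each run costs. The two savings multiply.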

Quality Fixes

I fixed several quality issues along the way.

Repetition. I analyzed 2,477 insights over a week and found a 54% repeat rate. “Best day this week!” appeared 260 times. The agent was copying example phrases from its prompt verbatim. I removed the examples, banned generic praise, and added a storage-layer dedup that rejects exact duplicates within a 6-hour window.
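A minimal sketch of the dedup check, assuming insights are kept with a stored-at timestamp. The in-memory array stands in for the real storage layer, and `StoredInsight` and `storeIfNew` are hypothetical names.

```typescript
const DEDUP_WINDOW_MS = 6 * 60 * 60 * 1000; // 6-hour window

// Hypothetical record shape for a stored insight.
interface StoredInsight {
  text: string;
  storedAt: number; // epoch milliseconds
}

// True if the exact same text was stored within the window.
function isDuplicate(
  candidate: string,
  history: StoredInsight[],
  nowMs: number
): boolean {
  return history.some(
    (h) => nowMs - h.storedAt <= DEDUP_WINDOW_MS && h.text === candidate
  );
}

// Stores the insight unless it is a recent exact duplicate.
// Returns whether it was stored.
function storeIfNew(
  candidate: string,
  history: StoredInsight[],
  nowMs: number
): boolean {
  if (isDuplicate(candidate, history, nowMs)) return false;
  history.push({ text: candidate, storedAt: nowMs });
  return true;
}
```

This only catches exact string matches, which is why it sits alongside the prompt changes rather than replacing them.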

Timezone. Lambda runs in us-east-1 with its clock set to UTC, so the agent was reading UTC hours from Date.getHours(). Noon Pacific is 8 PM UTC (7 PM during daylight saving time). The agent would say “evening going well!” at lunchtime. Fixed with Intl.DateTimeFormat using America/Los_Angeles.
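A small sketch of the timezone fix, assuming the goal is the local hour as a number. The `pacificHour` and `dayPart` helpers and the day-part boundaries are hypothetical. The `% 24` guards against runtimes that format midnight as 24 when `hour12` is false.

```typescript
// Formatter that reports only the hour, on a 24-hour clock, in Pacific time.
const pacificHourFormat = new Intl.DateTimeFormat("en-US", {
  timeZone: "America/Los_Angeles",
  hour: "numeric",
  hour12: false,
});

// Local hour (0-23) in America/Los_Angeles for the given instant.
function pacificHour(date: Date): number {
  const part = pacificHourFormat
    .formatToParts(date)
    .find((p) => p.type === "hour");
  if (!part) throw new Error("formatter returned no hour part");
  return Number(part.value) % 24;
}

// Hypothetical day-part boundaries for prompt wording.
function dayPart(hour: number): "overnight" | "morning" | "afternoon" | "evening" {
  if (hour < 6) return "overnight";
  if (hour < 12) return "morning";
  if (hour < 18) return "afternoon";
  return "evening";
}
```

For an instant like 2026-02-21T20:00:00Z, `getUTCHours()` reports 20 while `pacificHour` reports 12, which is the difference between "evening" and lunchtime. Daylight saving time is handled by the time zone database, so there is no manual offset.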

What It Looks Like Now

The display looks the same. Same 30-character insights in green, yellow, red, and rainbow. Same supportive voice noticing patterns. Abigail doesn’t know anything changed, which is exactly right.

The original dashboard ran for $4/month. The insights agent blew that up to $1,700/month. Now it’s about $30/month total: the original infrastructure plus one-shot inference at a sensible rate. The codebase is 2,559 lines lighter, the insights are less repetitive, and the agent knows what time zone it’s in.

Still open source on GitHub.

– John