Dashboard Update: Teaching the Agent to Think Like We Do

February 3, 2026

This is Part 4. Part 1 covers building the dashboard. Part 2 adds insulin tracking. Part 3 introduces the AI insights agent.

Yesterday I wrote about adding an AI insights agent to the diabetes dashboard. Getting it to sound human was the hard part. Since then, I’ve been teaching it to think more like we do.

Tonight

Abigail broke routine after school. A new dance class and a Goldfish snack were all it took to send her high. It happens. We loaded her up with insulin all evening to bring her back down. After she went to bed, it became clear we’d overshot the correction. Also happens. Normally not a huge deal: we just had to wake her up and give her some sugar.

The first wakeup went fine. She took the juice, we waited. But it wasn’t enough. She kept dropping.

By the second wakeup she was low enough to feel it. Disoriented, uncooperative, and very unhappy to be woken up. This is when it gets scary. We had to hold her down and push honey over her screams. Sometimes it’s not gentle coaxing and juice boxes. Sometimes it’s restraining your screaming kid because the alternative is worse.

Eventually the sugar kicked in. She rebounded hard, then leveled off. Actually stable.

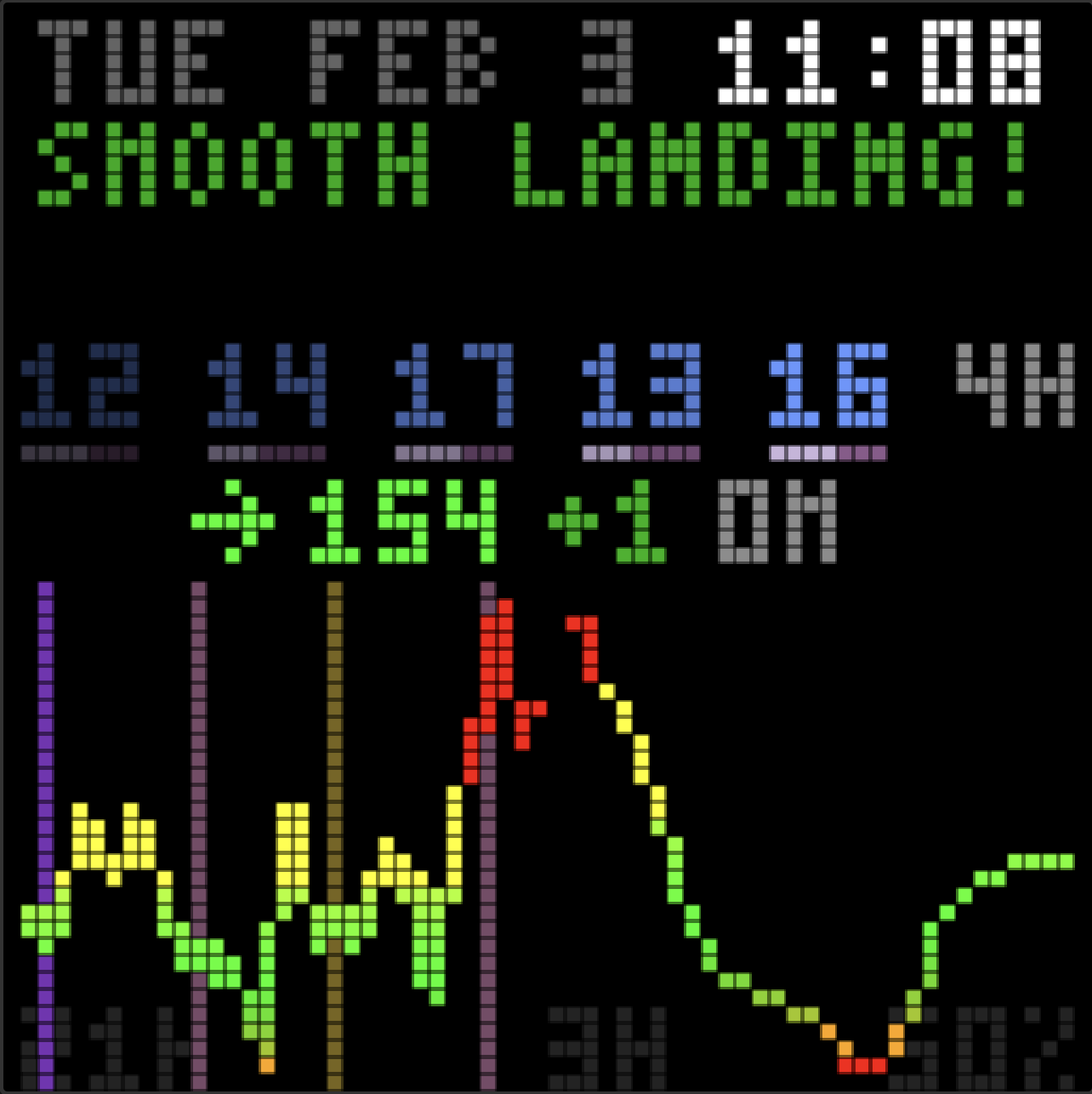

After she started to come up, the display said “SMOOTH LANDING!” Only it wasn’t. Not yet. She was barely out of the woods and climbing fast. The insight was wrong: the opposite of useful after a scare like that.

The Gap

The obvious problem: no intelligence about rate of change. The insights were over-indexed on the current value, not the trend.

After treating a low with sugar, glucose often spikes. Easy to overcorrect. A rise from 78 to 105 in a few readings isn’t a “smooth landing.” It’s a rebound that might overshoot high. The agent was celebrating these rebounds. “Coming up nicely!” when the trajectory suggested she’d blow past 200.
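To make the rebound case concrete, here’s a minimal Python sketch of the kind of check the prompt now describes in words. It isn’t the dashboard’s code (the agent reasons from a prompt, not from a function like this), and the name `is_post_low_rebound`, the 80 mg/dL low threshold, the 4-reading window, and the 8 mg/dL-per-reading rise are all illustrative assumptions.

```python
from typing import Sequence


def is_post_low_rebound(
    readings: Sequence[float],
    low_threshold: float = 80.0,        # assumed "near low" cutoff, mg/dL
    min_rise_per_reading: float = 8.0,  # assumed "steep climb", mg/dL per reading
    window: int = 4,                    # assumed number of recent readings to inspect
) -> bool:
    """Return True if recent readings dipped near a low and are now climbing steeply.

    `readings` are glucose values in mg/dL, oldest first.
    """
    recent = list(readings[-window:])
    if len(recent) < 2:
        return False
    # Did the window include a value at or below the low threshold?
    touched_low = min(recent) <= low_threshold
    # First differences: change from one reading to the next.
    deltas = [b - a for a, b in zip(recent, recent[1:])]
    avg_rise = sum(deltas) / len(deltas)
    still_rising = deltas[-1] > 0
    return touched_low and still_rising and avg_rise >= min_rise_per_reading


# A climb like "78 to 105 in a few readings" (illustrative values):
print(is_post_low_rebound([78, 86, 95, 105]))  # True: a rebound, not a landing
```

The point is that a number in range (105) says nothing on its own; the history that produced it decides whether “smooth landing” is true.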

The same problem works in reverse. A glucose reading of 115 and dropping doesn’t tell you much by itself. But 115 dropping and slowing down is good news. That’s a landing. 115 dropping and speeding up is a problem. Same number, opposite actions. The agent would see a drop from 150 to 130 to 120 to 115 and say “Still dropping, eat!” That’s false urgency. The drop is decelerating. She’s leveling off.
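The difference between “dropping” and “dropping but slowing” is just first differences (rate) versus the change in those differences (acceleration). A minimal sketch, assuming readings in mg/dL, oldest first; `classify_trend` and the ±3 mg/dL flat tolerance are hypothetical names and values for illustration, not something the agent literally runs.

```python
from typing import Sequence


def classify_trend(readings: Sequence[float], flat_tolerance: float = 3.0) -> str:
    """Label the latest movement by direction and by whether it is speeding up or slowing down."""
    if len(readings) < 3:
        return "insufficient data"
    # Rate: change between consecutive readings.
    deltas = [b - a for a, b in zip(readings, readings[1:])]
    prev, last = deltas[-2], deltas[-1]
    # Two tiny steps in a row: treat as flat.
    if abs(prev) <= flat_tolerance and abs(last) <= flat_tolerance:
        return "flat"
    # Use the latest nonzero step to decide direction.
    step = last if last != 0 else prev
    direction = "rising" if step > 0 else "falling"
    # Opposite signs mean the trend just turned around (e.g. a peak after a rebound).
    if prev * last < 0:
        return f"reversed, now {direction}"
    # Acceleration: is each step bigger or smaller than the one before?
    if abs(last) < abs(prev):
        return f"{direction}, decelerating"
    if abs(last) > abs(prev):
        return f"{direction}, accelerating"
    return f"{direction}, steady"


print(classify_trend([150, 130, 120, 115]))  # falling, decelerating
print(classify_trend([130, 125, 115]))       # falling, accelerating
```

Both examples end at 115. The first is the landing the agent was wrongly panicking about; the second is the one that deserves “eat.”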

What “Stable” Actually Means

A subtler problem: the agent didn’t know what stable really means.

It was saying “leveling off nicely!” when glucose was still drifting toward a low. 80 and dropping slowly isn’t stable. It’s still dropping. But the agent saw the number in range and celebrated.

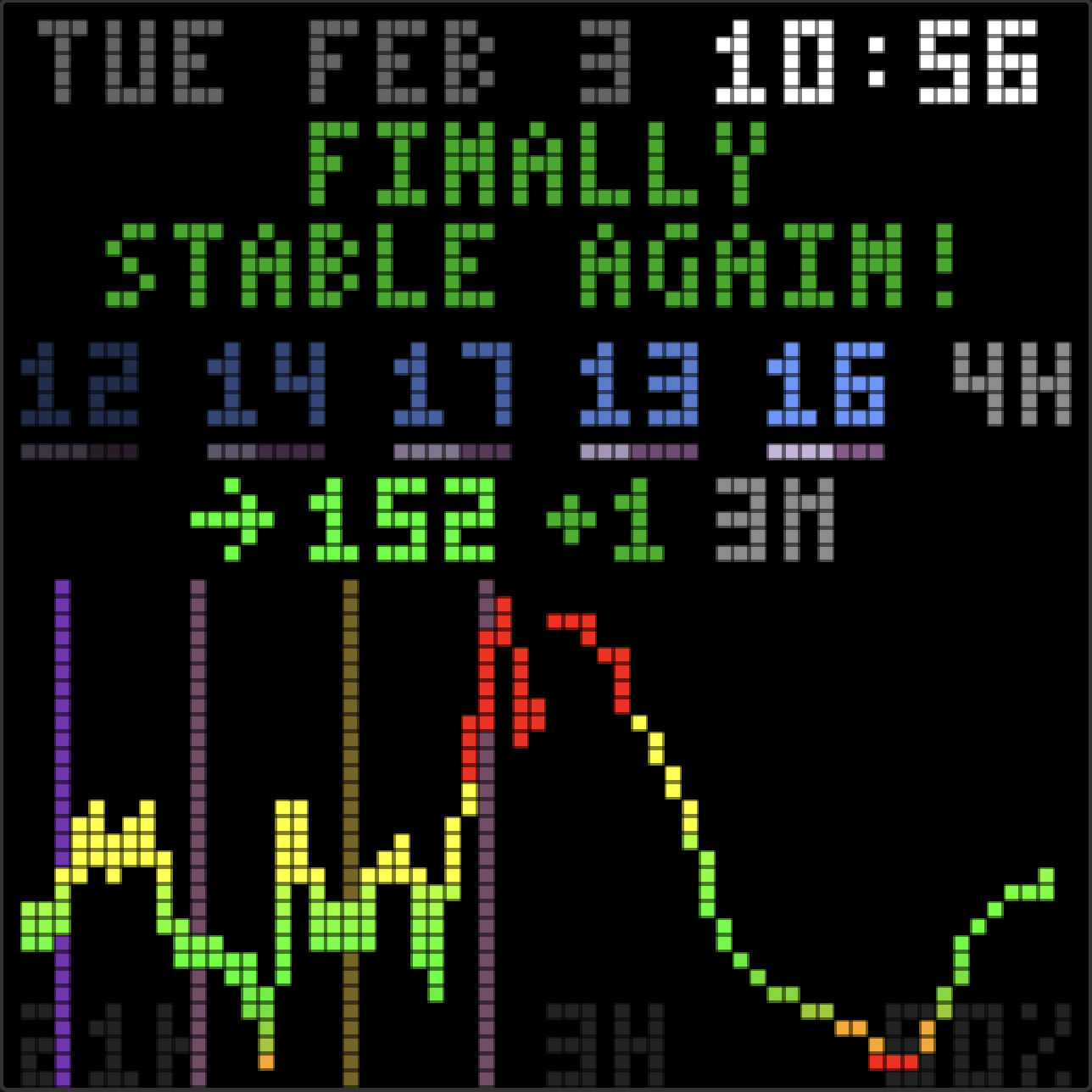

This matters most near the edges. At 78 and still drifting down, even slowly, “leveling off” creates false confidence. Stable means truly flat readings: two to three consecutive readings within ±3 mg/dL. That’s when “FINALLY STABLE AGAIN!” actually means something.
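Here’s one way to express that stability rule as code. It’s a sketch under one interpretation I’m assuming: “within ±3 mg/dL” means every reading in the window sits within 3 mg/dL of the latest one. The function name and defaults are illustrative.

```python
from typing import Sequence


def is_stable(readings: Sequence[float], count: int = 3, tolerance: float = 3.0) -> bool:
    """True if the last `count` readings (mg/dL, oldest first) all sit within ±tolerance of the latest."""
    if len(readings) < count:
        return False
    recent = readings[-count:]
    latest = recent[-1]
    return all(abs(r - latest) <= tolerance for r in recent)


print(is_stable([115, 116, 115]))  # True: genuinely flat
print(is_stable([83, 81, 79]))     # False: slow drift toward a low
```

A slow 2 mg/dL-per-reading drift fails over three readings but would pass with `count=2`, which is one argument for wanting three flat readings near the low threshold before celebrating.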

How the Refinements Happen

So I fired up Claude Code and together we refined the agent prompt to take all of this into account.

The cycle: I see a situation, note what the insight should have been, and Claude iterates on the prompt.

The agent says “Smooth landing!” during a steep rebound. Should have been: “Coming up fast, watch it.” Claude adds guidance about post-low spikes and overshoot risk.

The agent says “Leveling off!” while still drifting down. Should have been: “Still drifting, more?” Claude adds guidance that stable means flat for 2-3 readings.

The agent creates false urgency during a decelerating drop. Should have been: “Leveling off nicely!” Claude adds guidance to factor in acceleration, not just direction.

It’s iterative. The prompt grows more specific with each edge case, with explicit FORBIDDEN examples for each failure mode.
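I don’t generate the prompt from code; Claude edits it directly. But if you wanted to keep the failure modes organized as the list grows, a small structure like this could render the FORBIDDEN sections. The `FailureMode` type, field names, and output format are hypothetical; the example phrases come from the cases above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FailureMode:
    situation: str               # when this rule applies
    guidance: str                # what the agent should consider
    forbidden: tuple[str, ...]   # phrases the agent must not use here
    preferred: str               # an example of a good insight


def build_prompt_section(modes: list[FailureMode]) -> str:
    """Render each failure mode as a labeled block of prompt text."""
    lines: list[str] = []
    for m in modes:
        lines.append(f"SITUATION: {m.situation}")
        lines.append(f"GUIDANCE: {m.guidance}")
        for phrase in m.forbidden:
            lines.append(f'FORBIDDEN: "{phrase}"')
        lines.append(f'PREFERRED: "{m.preferred}"')
        lines.append("")  # blank line between modes
    return "\n".join(lines).rstrip()


MODES = [
    FailureMode("Steep rise after treating a low", "Post-low spikes can overshoot high.",
                ("Smooth landing!",), "Coming up fast, watch it."),
    FailureMode("Near the low threshold, still drifting down", "Stable means flat for 2-3 readings.",
                ("Leveling off!",), "Still drifting, more?"),
    FailureMode("Drop that is decelerating", "Factor in acceleration, not just direction.",
                ("Still dropping, eat!",), "Leveling off nicely!"),
]

print(build_prompt_section(MODES))
```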

Capturing the Reasoning

To make this cycle easier, I have the agent record its thinking with each insight so I can review it later and iterate accordingly.

Bedrock Agents don’t enforce required parameters, so even with emphatic prompts the agent wasn’t passing reasoning through the tool. But it does explain itself in its conversational response. So I parse that out and store it alongside the insight.
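A minimal sketch of that parsing step, assuming the conversational response labels its explanation with “Reasoning:” and falling back to the whole response when it doesn’t. The label, `extract_reasoning`, and `InsightRecord` are illustrative assumptions rather than Bedrock API names, and persisting the record is left out.

```python
import re
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Assumed convention: everything after a "Reasoning:" label is the explanation.
_REASONING_RE = re.compile(r"reasoning:\s*(.+)", re.IGNORECASE | re.DOTALL)


@dataclass
class InsightRecord:
    insight: str
    reasoning: str
    created_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


def extract_reasoning(response_text: str) -> str:
    """Pull the explanation out of the agent's free-text reply, collapsing whitespace."""
    match = _REASONING_RE.search(response_text)
    text = match.group(1) if match else response_text
    return " ".join(text.split())


def record_insight(insight: str, response_text: str) -> InsightRecord:
    """Pair the insight sent through the tool with the reasoning parsed from the reply."""
    return InsightRecord(insight=insight, reasoning=extract_reasoning(response_text))


rec = record_insight("[yellow]Still drifting, more?[/]",
                     "Posted.\nReasoning: Glucose at 81,\n down from 88 and 94.")
print(rec.reasoning)  # Glucose at 81, down from 88 and 94.
```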

```
Insight: "[green]Leveling off nicely![/]"
Reasoning: Glucose dropped from high→less high→almost normal over
the past hour, but the rate is decelerating. Each reading shows a
smaller drop than the last. This is a landing pattern, not a
continuing fall. Celebrating the deceleration, not warning about
the direction.

Insight: "[yellow]Coming up fast![/]"
Reasoning: Post-low rebound in progress. Glucose was 72 twenty
minutes ago, now 118 and still climbing steeply. This +46 rise
suggests overcorrection from treatment. Not celebrating yet
because trajectory points toward overshoot into high territory.

Insight: "[yellow]Still drifting, more?[/]"
Reasoning: Glucose at 81, down from 88 and 94. Still dropping
about 6-7 per reading. Not flat yet. Near the low threshold so
caution warranted. Suggesting additional carbs rather than
celebrating stability that hasn't arrived.
```

Now I can review not just what the agent said, but why it said it. Useful for debugging and for understanding when the logic needs refinement.

The Result

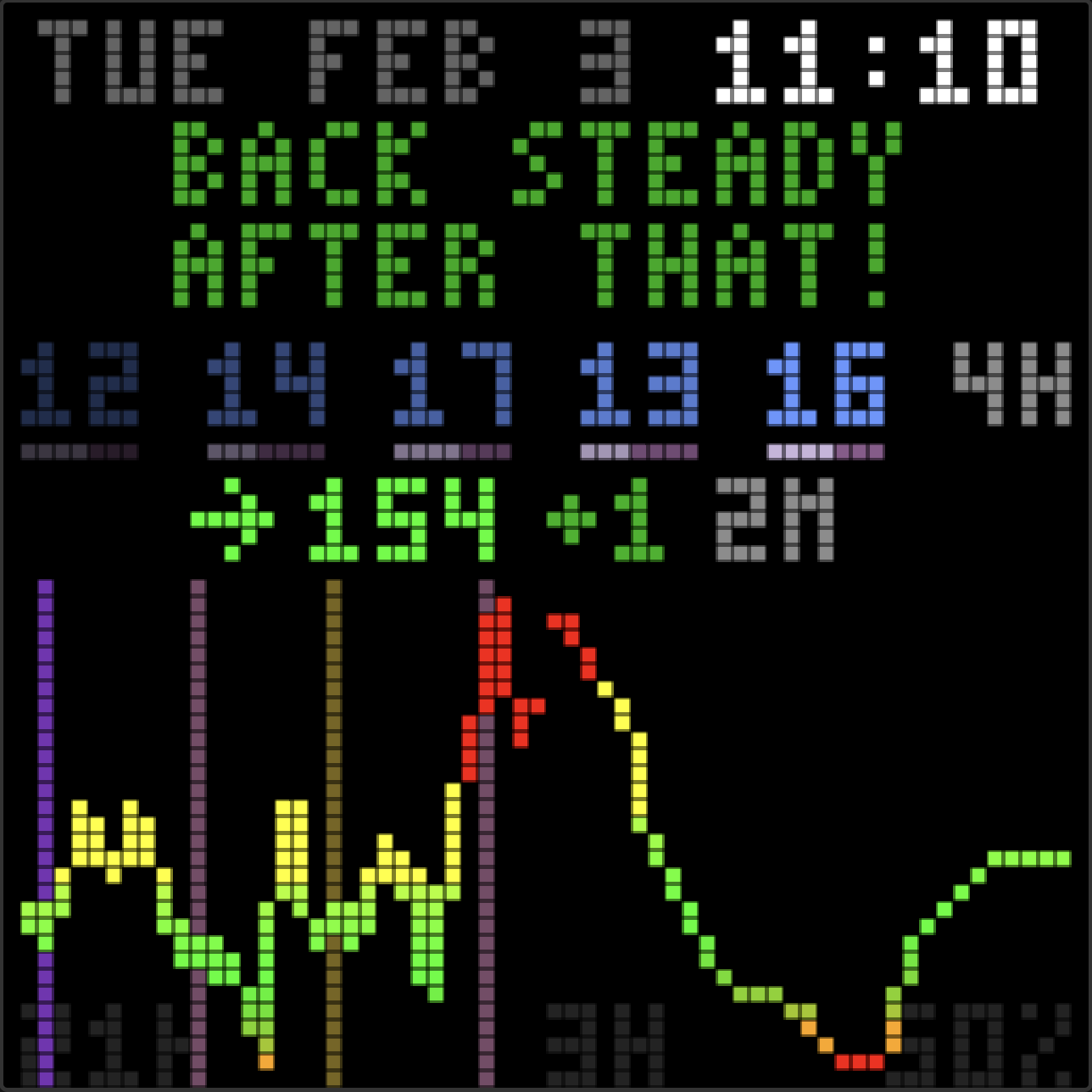

“BACK STEADY AFTER THAT!” acknowledges the journey. The agent saw the red spike in the chart, watched the recovery, and waited for truly flat readings before celebrating. It won’t mistake a rebound for a landing. It won’t panic during a decelerating drop. And it won’t claim stability when the drop keeps going slowly, like tonight.

That’s what I wanted: an agent that thinks about the situation the way we do.

I sure wish I could get real-time insulin data. The pump knows how much insulin is “on board” in these situations, which would help predict where things are headed. But Glooko syncs are delayed several hours. Something I’ll add the minute I can get my hands on real-time pump data. (Please please please give me API access to this, Insulet!)

Still open source on GitHub.

– John